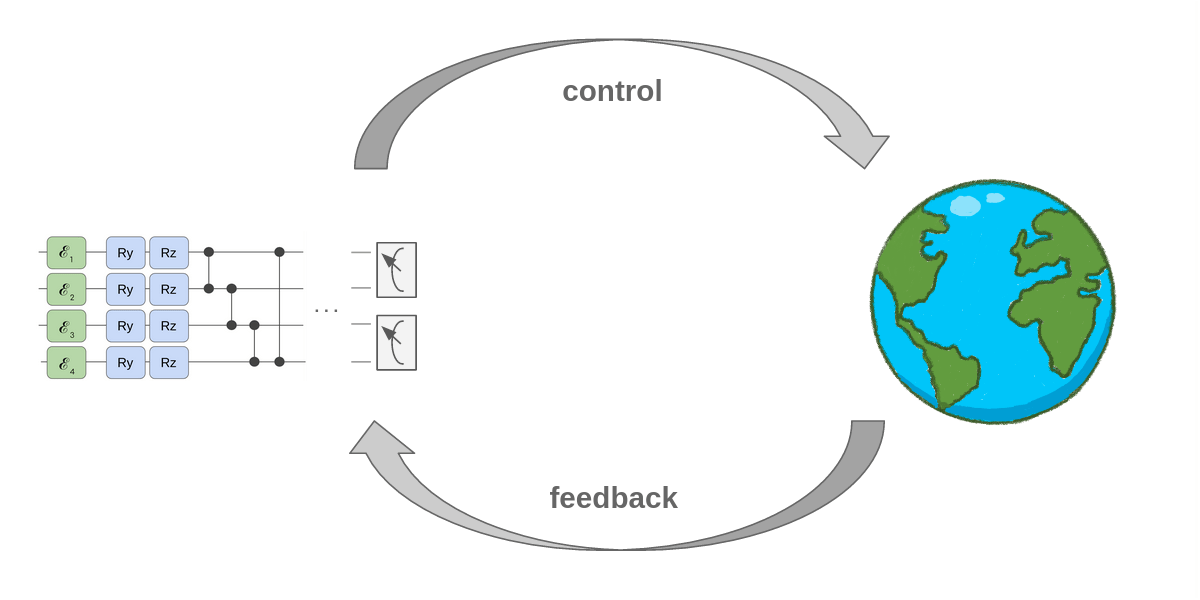

Research in Quantum Machine Learning (QML) has focused primarily on variational quantum algorithms (VQAs), and several proposals to enhance supervised, unsupervised and reinforcement learning (RL) algorithms with VQAs have been put forward. Out of the three, RL is the least studied and it is still an open question whether VQAs can be competitive with state-of-the-art classical algorithms based on neural networks (NNs) even on simple benchmark tasks.

Researchers at Leiden University and Volkswagen Data:Lab introduced a training method for Parametrized Quantum Circuits (PQCs) that can be used to solve RL tasks for discrete and continuous state spaces based on the deep Q-learning algorithm.
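For readers unfamiliar with deep Q-learning, the core of the update can be sketched in a few lines. This is a minimal illustration of the standard one-step Q-learning target and squared loss, not the authors' implementation. The function names `td_targets` and `td_loss` and the discount factor value are assumptions chosen for the example. The Q-values passed in could come from any parametrized model, whether an NN or a PQC.

```python
import numpy as np

def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """One-step Q-learning targets: r + gamma * max_a' Q_target(s', a').

    next_q_values has shape (batch, n_actions) and is assumed to come from
    a separate target model; terminal transitions (done = 1) get no bootstrap.
    """
    rewards = np.asarray(rewards, dtype=float)
    next_q_values = np.asarray(next_q_values, dtype=float)
    dones = np.asarray(dones, dtype=float)
    max_next_q = next_q_values.max(axis=1)
    return rewards + gamma * (1.0 - dones) * max_next_q

def td_loss(q_values, actions, targets):
    """Mean squared error between Q(s, a) for the taken actions and the targets."""
    q_values = np.asarray(q_values, dtype=float)
    actions = np.asarray(actions, dtype=int)
    targets = np.asarray(targets, dtype=float)
    chosen = q_values[np.arange(len(actions)), actions]
    return float(np.mean((chosen - targets) ** 2))
```

In a full agent, the gradient of this loss with respect to the model parameters would drive the update; how that gradient is obtained for a PQC is outside the scope of this sketch.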

They investigated which architectural choices for quantum Q-learning agents are most important for successfully solving certain types of environments by performing ablation studies for a number of different data encoding and readout strategies. They provided insight into why the performance of a VQA-based Q-learning algorithm crucially depends on the observables of the quantum model and showed how to choose suitable observables based on the learning task at hand.
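One way to see why the observable matters is a toy single-qubit model simulated with NumPy. This is a rough sketch under stated assumptions, not the circuit used in the work: the one-qubit `ry` encoding, the choice of the Pauli-Z observable, and the multiplicative `weight` on the readout are all hypothetical choices made for illustration. The expectation value of a Pauli observable always lies in [-1, 1], so a model that reads out Q-values this way can only represent returns outside that interval if the output is rescaled somehow.

```python
import numpy as np

# Pauli-Z observable on a single qubit.
Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def ry(angle):
    """Matrix of a single-qubit rotation about the Y axis."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])

def expectation_z(x, theta):
    """<Z> after encoding input x and applying a trainable rotation theta to |0>."""
    state = ry(theta) @ ry(x) @ np.array([1.0, 0.0])
    return float(state @ Z @ state)

def q_value(x, theta, weight=1.0):
    """Toy Q-value: the bounded expectation value, rescaled by an assumed weight."""
    return weight * expectation_z(x, theta)
```

For this toy circuit the expectation value equals cos(x + theta), so `q_value(x, theta)` with the default weight can never exceed 1, whatever the parameters. The readout therefore has to be matched to the range of returns the environment can produce.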

To compare their model against the classical DQN algorithm, they performed an extensive hyperparameter search of PQCs and NNs with varying numbers of parameters. They confirmed that, similar to results in the classical literature, architectural choices and hyperparameters contribute more to the agents’ success in an RL setting than the number of parameters used in the model.

Finally, they showed when recent separation results between classical and quantum agents for policy gradient RL can be extended to inferring optimal Q-values in restricted families of environments.