In the race toward practical quantum computing, researchers have established fundamental limits on how effectively errors in quantum devices can be suppressed without adaptive operations. A new theoretical framework shows that some of the limitations seen in practice are not mere technical hurdles but constraints that no error mitigation strategy can overcome.

The growing collection of Noisy Intermediate-Scale Quantum (NISQ) devices promises computational advantages through the control of dozens to hundreds of qubits. However, these devices face a persistent enemy: accumulated errors from imperfect quantum gates that eventually destroy any quantum advantage. While full quantum error correction exists as a theoretical solution, it requires adaptive operations, that is, gates conditioned on the outcomes of mid-circuit measurements, which current NISQ hardware cannot reliably perform.

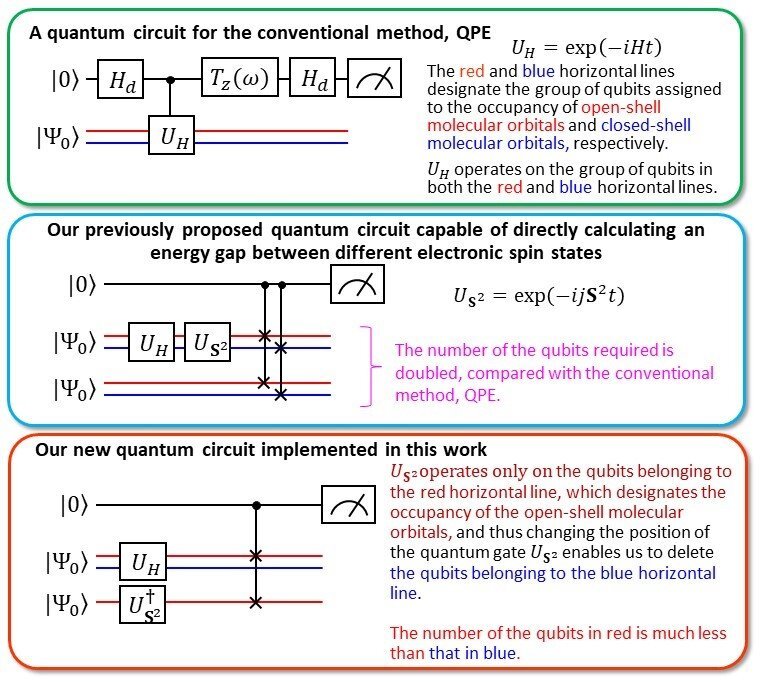

This technological gap has spawned diverse error mitigation techniques, including zero-noise extrapolation, probabilistic error cancellation, and virtual distillation. These methods share a common approach – they avoid adaptive operations by running noisy quantum circuits multiple times and applying classical post-processing to the results.
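To make the "run many times, then post-process classically" idea concrete, here is a minimal sketch of zero-noise extrapolation on a toy model. The exponential decay in `noisy_expectation` and every function name are illustrative assumptions, not taken from the paper. On a real device the values would come from circuits run at deliberately amplified noise levels.

```python
import math

def noisy_expectation(scale, ideal=1.0, decay=0.1):
    """Toy noise model (an assumption): the measured expectation value
    decays exponentially as the noise is scaled up by `scale`."""
    return ideal * math.exp(-decay * scale)

def richardson_weights(scales):
    """Lagrange weights that evaluate, at noise scale 0, the polynomial
    interpolating the measured points."""
    weights = []
    for i, xi in enumerate(scales):
        w = 1.0
        for j, xj in enumerate(scales):
            if j != i:
                w *= (0.0 - xj) / (xi - xj)
        weights.append(w)
    return weights

def zero_noise_extrapolate(scales, values):
    """Classical post-processing: a weighted sum of the noisy results."""
    return sum(w * v for w, v in zip(richardson_weights(scales), values))

scales = [1.0, 2.0, 3.0]                      # noise amplification factors
values = [noisy_expectation(s) for s in scales]
zne_estimate = zero_noise_extrapolate(scales, values)
print(f"unmitigated: {values[0]:.4f}, extrapolated: {zne_estimate:.4f}")
```

The extrapolated value lands closer to the ideal value of 1 than the unmitigated one, but the weights (3, -3, 1 for these scales) have absolute values summing to 7. Any statistical noise in the inputs is amplified accordingly, which is the kind of sampling cost the article goes on to discuss.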

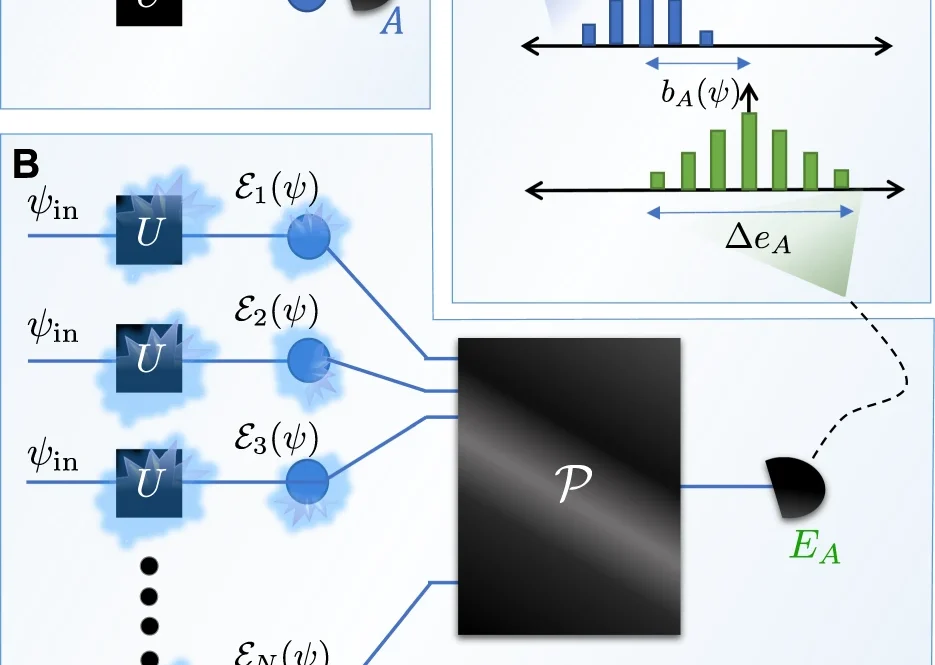

The newly established framework provides a universal benchmark for error mitigation performance through “maximum estimator spread” – essentially quantifying how many additional device runs are needed to achieve a specified accuracy threshold. The research derives fundamental lower bounds that no error mitigation strategy can surpass, expressed in terms of how noise reduces the distinguishability between quantum states.
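One standard way to see why an estimator's spread translates into extra device runs is Hoeffding's inequality. If each sample lies in an interval of width Δ, then about Δ² ln(2/ε) / (2δ²) samples suffice to reach accuracy δ with failure probability at most ε. The sketch below assumes this conversion. The function name and the example spreads are hypothetical, chosen only for illustration.

```python
import math

def samples_needed(spread, accuracy, failure_prob):
    """Sample count sufficient, by Hoeffding's inequality, for the sample
    mean of values confined to an interval of width `spread` to be within
    `accuracy` of its expectation with probability >= 1 - failure_prob."""
    if spread < 0 or accuracy <= 0 or not (0 < failure_prob < 1):
        raise ValueError("invalid parameters")
    return math.ceil(spread**2 * math.log(2.0 / failure_prob) / (2.0 * accuracy**2))

# An unmitigated +/-1 measurement has spread 2; the mitigated spread of 6
# is a made-up value standing in for some mitigation protocol.
baseline = samples_needed(spread=2.0, accuracy=0.05, failure_prob=0.01)
mitigated = samples_needed(spread=6.0, accuracy=0.05, failure_prob=0.01)
print(baseline, mitigated, mitigated / baseline)
```

Because the sample count grows with the square of the spread, tripling the spread costs roughly nine times as many runs at the same accuracy.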

Two significant findings emerge from these theoretical bounds. First, when mitigating local depolarizing noise in layered quantum circuits, the sampling overhead scales exponentially with circuit depth for any error mitigation protocol. This confirms that the exponential growth in estimation error observed in existing techniques isn’t just a limitation of current methods but a fundamental obstacle for all possible approaches.
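The flavor of this exponential scaling can be seen in a deliberately simple toy model, which is not the paper's bound. Suppose each circuit layer shrinks the measured signal by a factor (1 - p), and the only mitigation is to rescale the result classically. The per-layer shrinkage and the function name below are assumptions made for illustration.

```python
def rescaling_overhead(error_rate, depth):
    """Toy model: every layer multiplies the signal by (1 - error_rate).
    Undoing this by classical rescaling stretches the estimator's range by
    the inverse factor, and the sample count by the square of that."""
    if not 0 <= error_rate < 1 or depth < 0:
        raise ValueError("invalid parameters")
    signal = (1.0 - error_rate) ** depth
    spread_factor = 1.0 / signal
    return spread_factor ** 2

for depth in (10, 50, 100, 200):
    print(depth, f"{rescaling_overhead(0.01, depth):.1f}")
```

Doubling the depth squares the overhead in this model. The result described above says that, for local depolarizing noise, more sophisticated protocols also face exponential growth in circuit depth.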

Second, the research demonstrates that probabilistic error cancellation – a prominent existing technique – is actually optimal among a broad class of strategies when mitigating local dephasing noise across arbitrary numbers of qubits.
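For readers unfamiliar with probabilistic error cancellation, the sketch below shows the textbook construction for single-qubit dephasing with flip probability p. The noise is inverted by a signed mixture of "do nothing" and "apply Z", and the cost is read off from the absolute sum of the coefficients. The function names are hypothetical, and the code illustrates only the standard construction, not the paper's optimality proof.

```python
def dephasing_inverse_quasiprob(p):
    """Coefficients (q_I, q_Z) such that q_I * identity + q_Z * (Z . Z)
    inverts the dephasing channel rho -> (1 - p) rho + p Z rho Z."""
    if not 0 <= p < 0.5:
        raise ValueError("p must lie in [0, 0.5)")
    return (1 - p) / (1 - 2 * p), -p / (1 - 2 * p)

def compose(a, b):
    """Compose two maps of the form c_I * identity + c_Z * (Z . Z),
    using the fact that applying Z-conjugation twice is the identity."""
    return (a[0] * b[0] + a[1] * b[1], a[0] * b[1] + a[1] * b[0])

def pec_cost(p, n_qubits):
    """Return (gamma^n, gamma^(2n)): the spread factor and the usual
    sample-count factor for independent dephasing on n qubits."""
    q_i, q_z = dephasing_inverse_quasiprob(p)
    gamma = abs(q_i) + abs(q_z)
    return gamma ** n_qubits, gamma ** (2 * n_qubits)

p = 0.05
noise = (1 - p, p)                      # dephasing in the same two-term form
inverse = dephasing_inverse_quasiprob(p)
print(compose(inverse, noise))          # approximately (1.0, 0.0): noise undone
print(pec_cost(p, 10))
```

The negative coefficient cannot be realized as a physical probability, so it is handled by sampling the Z correction with probability |q_Z| / γ and flipping the sign of the recorded outcome. Here γ = 1 / (1 - 2p), and this sign trick is what inflates the number of runs.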

This work parallels historical developments in thermodynamics, where Carnot’s theorem established the ultimate efficiency limit for all heat engines. Similarly, these new bounds on quantum error mitigation give researchers clarity about which goals are physically impossible and which existing methods already reach or approach theoretical optimality. The findings represent an important step toward understanding the inherent limitations of near-term quantum computing and should help direct future research efforts more efficiently.

npj Quantum Information, Published online: 22 September 2022; doi:10.1038/s41534-022-00618-z